Tagged: Google, web core vitals

September 16, 2023 at 12:43 am #1419316

Hello,

I hope you can help us optimize our website for Google’s Core Web Vitals.

We suddenly started failing these metrics. The main cause seems to be an excessive DOM size.

It seems to be connected to the style elements and layouts. Is there a way to optimize this so that we pass Core Web Vitals? They are a big ranking factor now.
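Lighthouse’s “Avoid an excessive DOM size” audit reports three numbers: total DOM elements, maximum DOM depth, and the maximum number of child elements under one parent. A minimal sketch for approximating those numbers offline from saved page HTML, using only Python’s standard `html.parser`. It does not implement the full HTML5 tree-building rules, so implicitly closed tags (an unclosed `<p>` or `<li>`, for instance) can inflate the depth. Treat the output as a rough estimate, not the figure Lighthouse will show. The names `DomStats` and `dom_stats` are hypothetical.

```python
from html.parser import HTMLParser

# Void elements never have an end tag, so they are counted but never "opened".
VOID_ELEMENTS = {
    "area", "base", "br", "col", "embed", "hr", "img",
    "input", "link", "meta", "source", "track", "wbr",
}


class DomStats(HTMLParser):
    """Approximate element count, nesting depth and widest parent of an HTML page."""

    def __init__(self) -> None:
        super().__init__()
        self.elements = 0
        self.max_depth = 0
        self.max_children = 0
        self._stack = []  # [tag, child_count] for each currently open element

    def _count(self) -> None:
        self.elements += 1
        if self._stack:
            parent = self._stack[-1]
            parent[1] += 1
            self.max_children = max(self.max_children, parent[1])
        # The new element sits one level below the innermost open element.
        self.max_depth = max(self.max_depth, len(self._stack) + 1)

    def handle_starttag(self, tag, attrs):
        self._count()
        if tag not in VOID_ELEMENTS:
            self._stack.append([tag, 0])

    def handle_startendtag(self, tag, attrs):
        # Explicit self-closing syntax such as <br/>: count it, do not open it.
        self._count()

    def handle_endtag(self, tag):
        # Close back to the nearest matching open tag; stray end tags are ignored.
        for i in range(len(self._stack) - 1, -1, -1):
            if self._stack[i][0] == tag:
                del self._stack[i:]
                return


def dom_stats(html: str) -> DomStats:
    parser = DomStats()
    parser.feed(html)
    parser.close()
    return parser


if __name__ == "__main__":
    sample = "<html><body><ul><li>a</li><li>b</li><li>c</li></ul><img src='x.png'></body></html>"
    stats = dom_stats(sample)
    # prints "elements=7 depth=4 widest parent=3"
    print(f"elements={stats.elements} depth={stats.max_depth} widest parent={stats.max_children}")
```

To use it on a real page, save the rendered homepage HTML to a file and pass its contents to `dom_stats`.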

Thanks

September 16, 2023 at 10:25 am #1419320

Is this your website?

Are you still on Enfold 4.2?

Think about your images when you upload them. First, do you really need PNG files at all? For example, the Mena-Info image in your content slider is 437 KB as a PNG but only 53 KB as a JPEG.
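A quick way to find conversion candidates is to list every PNG above some size limit. A minimal sketch, assuming a standard WordPress install where uploads live under `wp-content/uploads`. The 100 KB cut-off and the function name `large_pngs` are arbitrary choices for illustration. Whether a given PNG can become a JPEG still needs a human check, since images that rely on transparency cannot.

```python
from __future__ import annotations

from pathlib import Path


def large_pngs(root: str, min_bytes: int = 100 * 1024) -> list[tuple[str, int]]:
    """Return (path, size in bytes) for each PNG under root of at least min_bytes, largest first."""
    hits = []
    for path in Path(root).rglob("*"):
        # Match the extension case-insensitively so LOGO.PNG is included too.
        if path.is_file() and path.suffix.lower() == ".png":
            size = path.stat().st_size
            if size >= min_bytes:
                hits.append((str(path), size))
    return sorted(hits, key=lambda hit: hit[1], reverse=True)
```

Running `large_pngs("wp-content/uploads")` from the site root would list the heaviest PNGs first.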

The same goes for logos: if your partners offer SVG versions of their logos (e.g. Global Cyber Alliance), use those instead. You haven’t activated gzip for your homepage!
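How much gzip saves depends on the content, but markup with many repeated wrapper elements tends to compress very well. A minimal sketch using Python’s standard `gzip` module on a made-up, deliberately repetitive HTML snippet. Real pages will usually compress less than this. The function name `gzip_savings` is hypothetical. The actual server-side switch (for example mod_deflate rules in `.htaccess`) is Apache configuration and depends on what the host has installed.

```python
from __future__ import annotations

import gzip


def gzip_savings(data: bytes, level: int = 6) -> tuple[int, int, float]:
    """Return (original bytes, gzip bytes, percent saved) for one payload."""
    if not data:
        raise ValueError("payload is empty")
    # Level 6 mirrors zlib's default; gzip.compress itself defaults to 9.
    compressed = gzip.compress(data, compresslevel=level)
    saved = 100.0 * (1 - len(compressed) / len(data))
    return len(data), len(compressed), saved


if __name__ == "__main__":
    # Made-up markup standing in for a real page.
    html = ("<div class='wrapper'><p>Hello</p></div>\n" * 500).encode("utf-8")
    original, compressed, saved = gzip_savings(html)
    print(f"{original} bytes -> {compressed} bytes gzip ({saved:.1f}% smaller)")
```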

Ask your provider whether the required modules (e.g. mod_deflate) are installed. If so, we can activate compression via an .htaccess file.

September 16, 2023 at 12:24 pm #1419330

Hello,

Our website is powerdmarc.com, and yes, we are using Enfold.

Did you check a different website?

September 16, 2023 at 1:15 pm #1419332

Hi,

Unfortunately, there’s no way of changing the DOM tree, but perhaps you could try lazy loading HTML, as the test suggests: https://support.nitropack.io/hc/en-us/articles/17144942904337?utm_source=lighthouse&utm_medium=lr

Best regards,

Rikard

September 16, 2023 at 4:06 pm #1419334

And you don’t like what you see? ;)

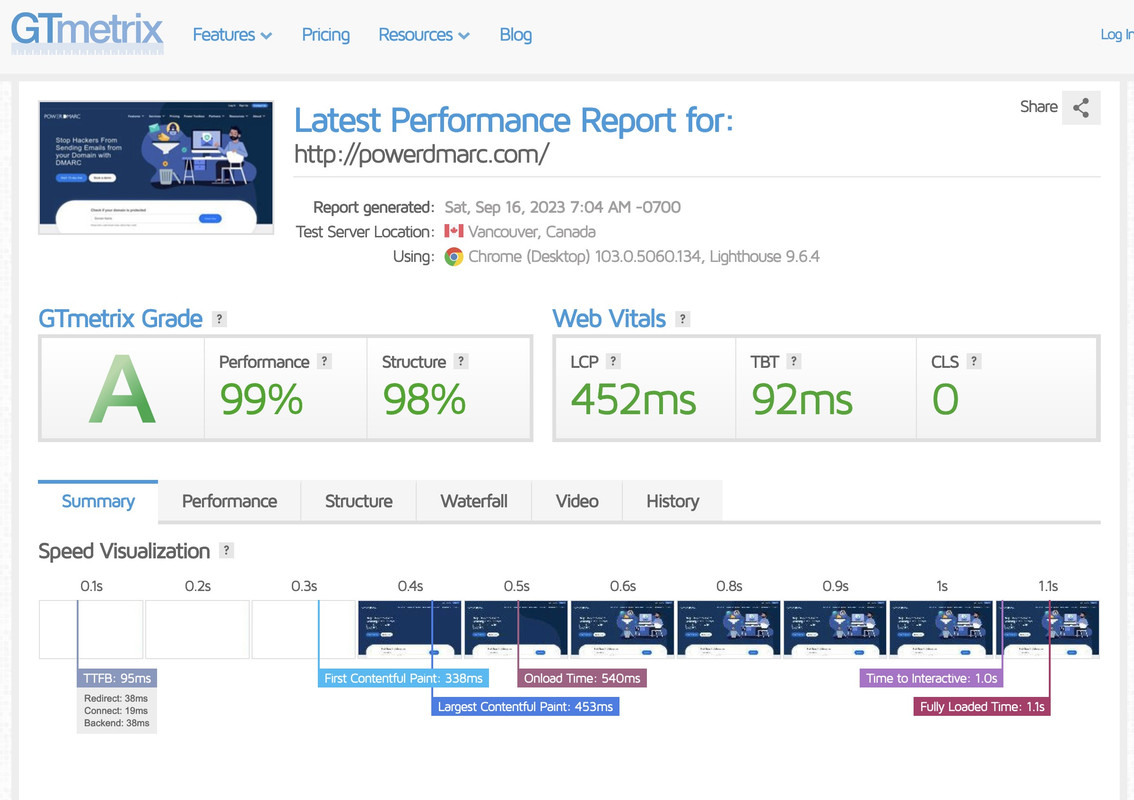

GTmetrix uses Lighthouse too, so I can’t believe that LCP and FCP are so bad on PageSpeed Insights but not on GTmetrix. A fantastic TTFB!
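To compare numbers from PageSpeed Insights and GTmetrix on the same scale, one can bucket them against the thresholds Google publishes on web.dev: LCP good up to 2.5 s and poor above 4 s, FCP 1.8 s and 3 s, TTFB 0.8 s and 1.8 s. LCP is one of the Core Web Vitals; FCP and TTFB are supporting metrics. Google applies these thresholds to the 75th percentile of field data, so a single lab run is only indicative. A minimal sketch; the function name `rate` and the sample values are placeholders, not numbers from this thread.

```python
# (upper bound for "good", upper bound for "needs improvement"), in seconds,
# as published by Google on web.dev for field data at the 75th percentile.
THRESHOLDS = {
    "LCP": (2.5, 4.0),
    "FCP": (1.8, 3.0),
    "TTFB": (0.8, 1.8),
}


def rate(metric: str, seconds: float) -> str:
    """Bucket one measurement as 'good', 'needs improvement' or 'poor'."""
    good, needs_improvement = THRESHOLDS[metric]
    if seconds <= good:
        return "good"
    if seconds <= needs_improvement:
        return "needs improvement"
    return "poor"


if __name__ == "__main__":
    # Placeholder values only; substitute the numbers from each tool's report.
    for metric, value in {"LCP": 3.1, "FCP": 1.2, "TTFB": 0.2}.items():
        print(f"{metric}: {value:.1f} s -> {rate(metric, value)}")
```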

Given the load times, I wouldn’t care about the number of DOM elements.

PS: that page does not belong to you: https://menainfosec.com/

PPS: Brotli compresses even better than mod_deflate/gzip ;)
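To confirm whether compression is actually active after changing the server configuration, one can request the homepage while advertising support for both Brotli and gzip, then read the `Content-Encoding` response header. A minimal sketch with Python’s standard `urllib`. The function names and the User-Agent string are hypothetical. A missing header means the body came back uncompressed, at least as seen from where the request was made (a CDN or proxy in between can change this).

```python
from urllib.request import Request, urlopen


def negotiated_encoding(headers) -> str:
    """Return the server's Content-Encoding in lower case, or 'none' if absent."""
    value = headers.get("Content-Encoding")
    return value.strip().lower() if value else "none"


def check_compression(url: str, timeout: float = 10.0) -> str:
    # Offer Brotli and gzip; the server picks one or sends the body uncompressed.
    request = Request(url, headers={
        "Accept-Encoding": "br, gzip",
        "User-Agent": "compression-check/0.1",
    })
    with urlopen(request, timeout=timeout) as response:
        # Only the headers are inspected; the body is never decoded.
        return negotiated_encoding(response.headers)
```

For example, `check_compression("https://powerdmarc.com/")` returning `"br"` or `"gzip"` would indicate that compression is on, while `"none"` would indicate it is not.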
